Introduction to Modern Data Access

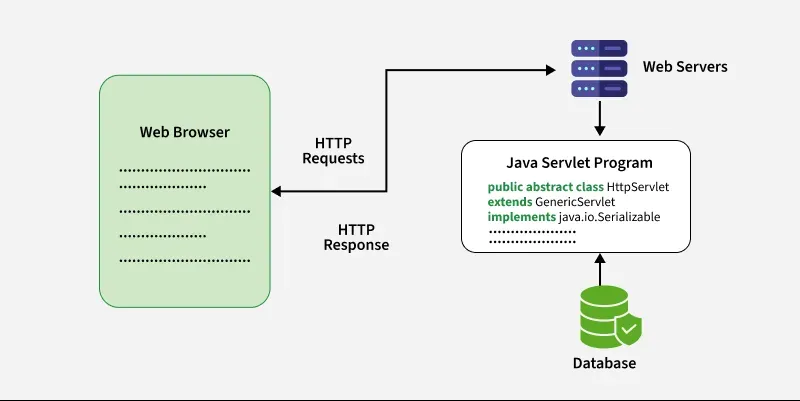

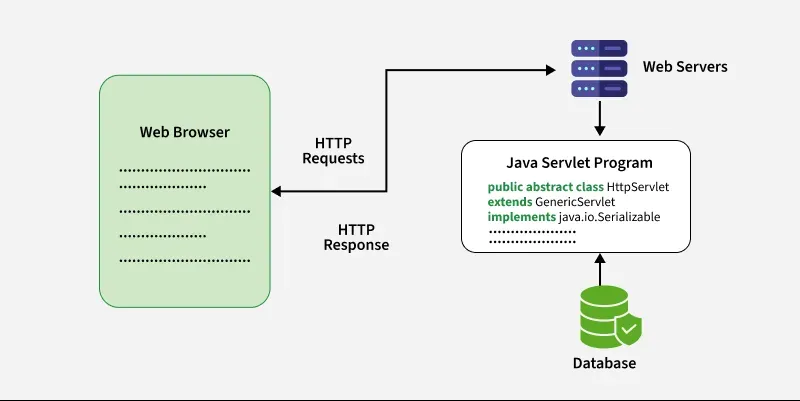

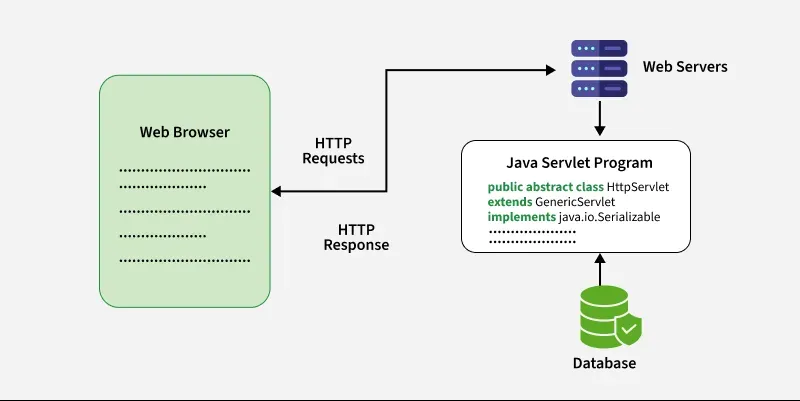

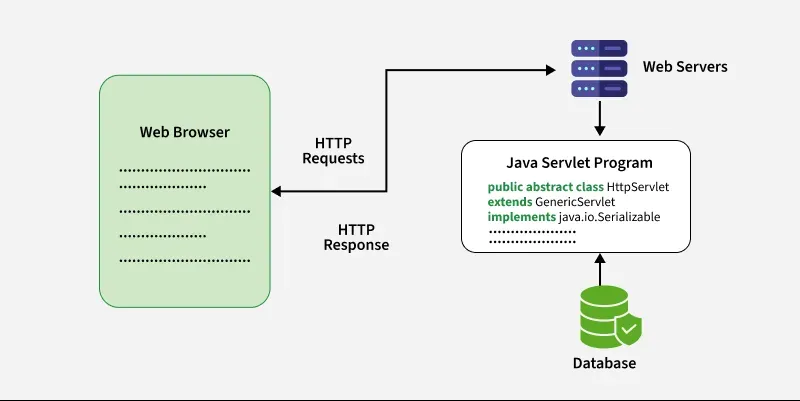

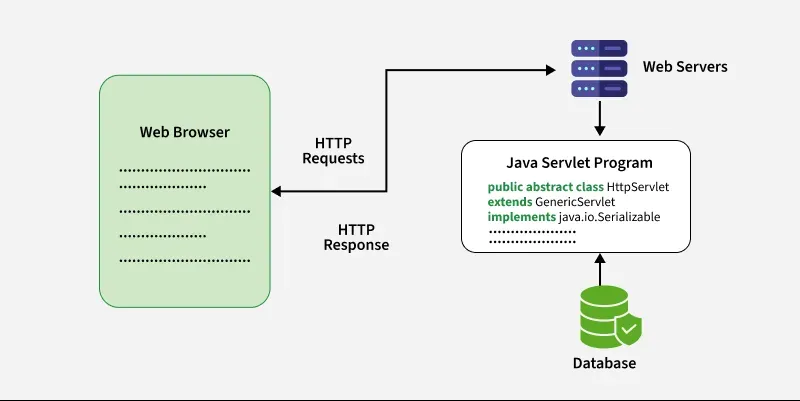

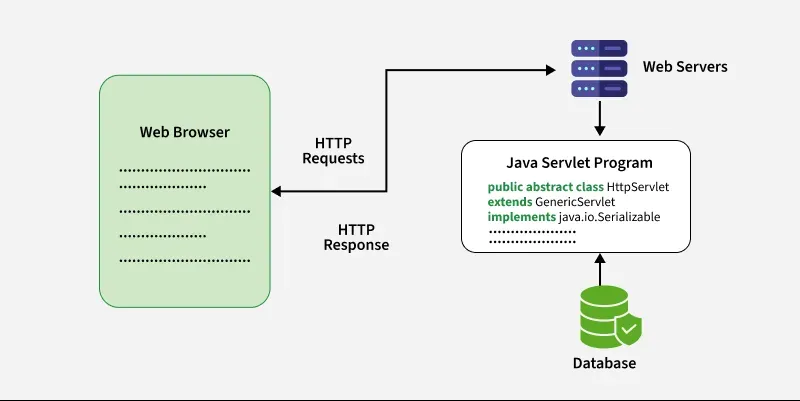

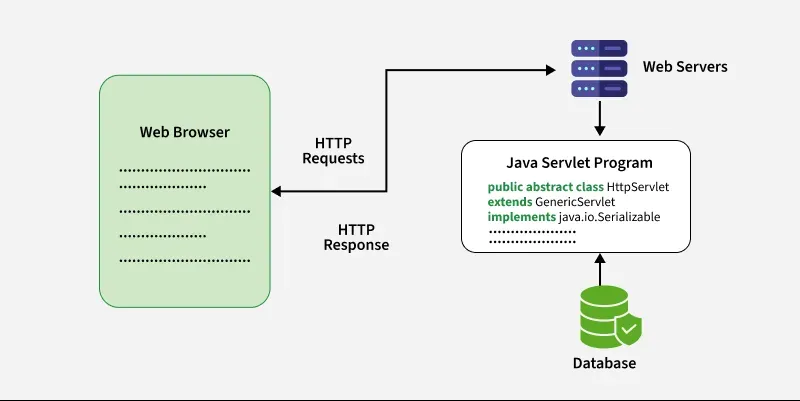

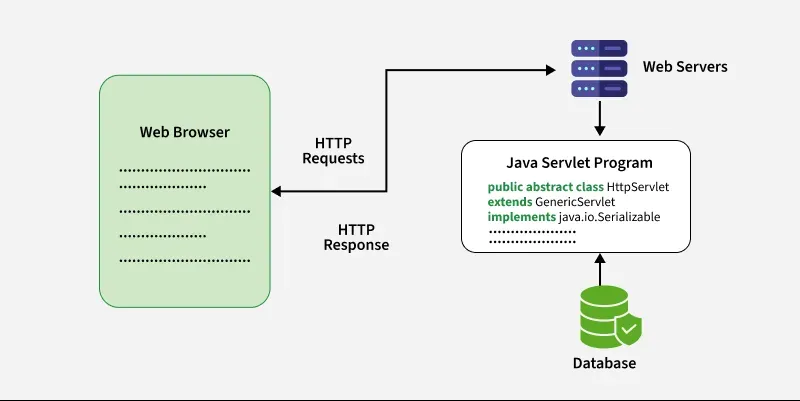

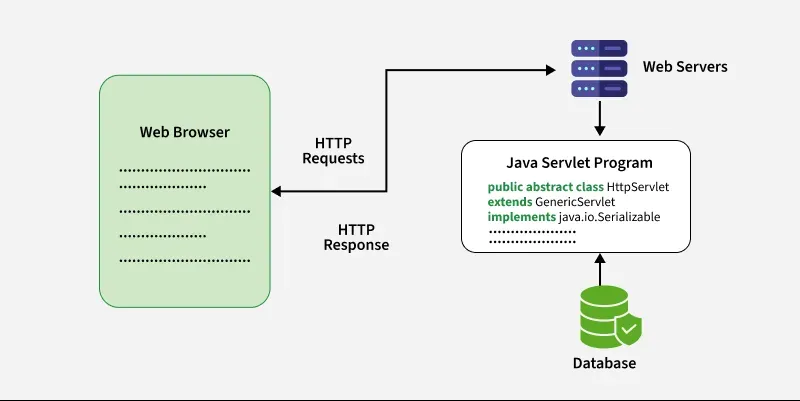

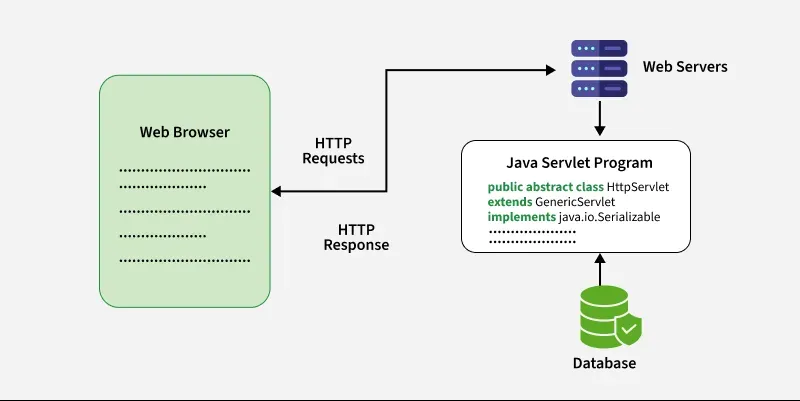

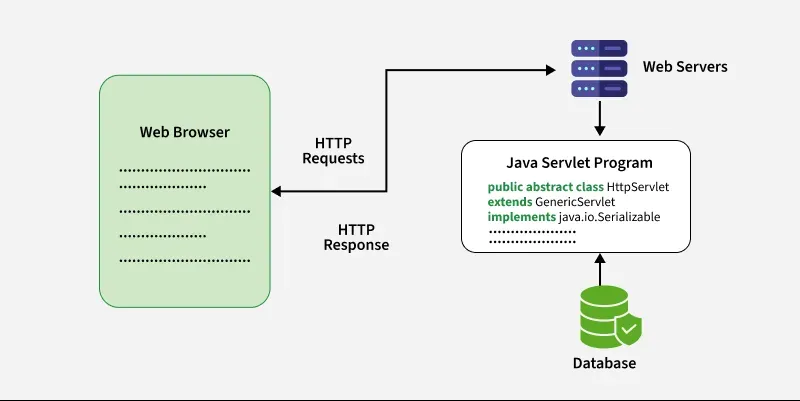

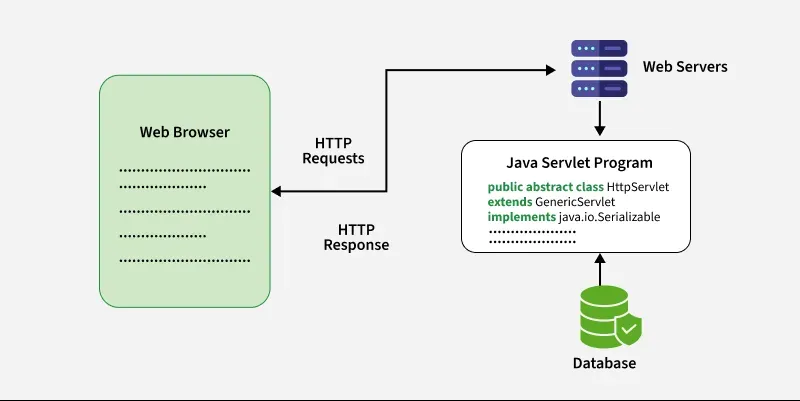

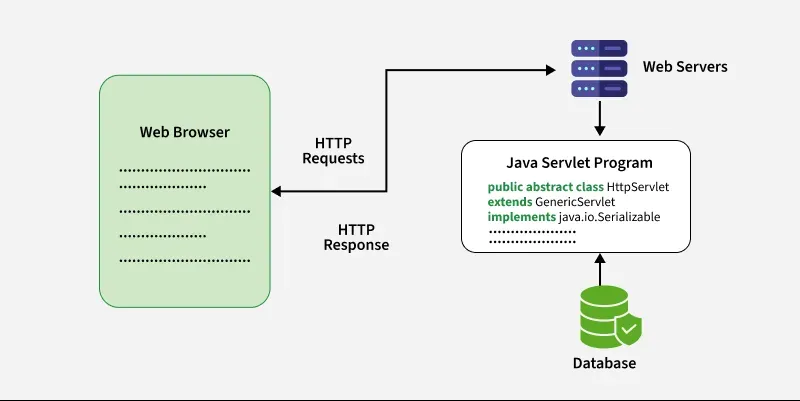

In the vast ecosystem of Java Development, few technologies are as fundamental and enduring as JDBC (Java Database Connectivity). While modern frameworks like Spring Boot, Hibernate, and Jakarta EE provide powerful abstractions for data persistence, they all rely on JDBC as the underlying engine. Understanding JDBC is not merely a lesson in history; it is a critical skill for Java Backend engineers aiming to optimize Java Performance, debug complex Java Enterprise applications, or develop custom solutions where Object-Relational Mapping (ORM) tools might introduce unnecessary overhead.

JDBC acts as the bridge between the Java application and the database, providing a standard API for connecting to a database, executing SQL commands, and processing results. Whether you are working with Java 17, the latest Java 21, or maintaining legacy systems, the principles of JDBC remain constant. It allows developers to write database-agnostic code while leveraging the specific capabilities of database vendors through drivers.

This comprehensive guide explores the depths of JDBC, moving beyond basic connectivity to advanced topics like connection pooling, batch processing, transaction management, and the nuances of integrating with ORMs. We will examine how to build high-concurrency applications, manage resources efficiently, and adhere to Java Best Practices to ensure Java Scalability and security.

Section 1: Core Concepts and Architecture

The JDBC Driver Manager and DataSource

At the heart of JDBC lies the driver architecture. A JDBC driver is a software component enabling a Java application to interact with a database. Historically, the DriverManager class was the primary entry point for establishing connections. However, in modern Java Architecture, specifically within Java Enterprise and Spring Boot environments, the use of a DataSource is preferred. The DataSource interface provides a more robust way to obtain connections and is essential for implementing connection pooling and distributed transactions.

When you include a dependency via Java Maven or Java Gradle, you are essentially pulling in the vendor-specific implementation (the driver) that adheres to the JDBC interfaces. This separation of concerns allows your business logic to remain decoupled from the specific database implementation, a core tenet of Clean Code Java.

Establishing a Connection: The Foundation

Let’s look at a fundamental example of establishing a connection. While frameworks hide this boilerplate, understanding the lifecycle is crucial for troubleshooting “connection leak” issues in Java Microservices.

package com.example.jdbc;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.SQLException;

import java.util.Properties;

public class ConnectionManager {

private static final String DB_URL = "jdbc:postgresql://localhost:5432/inventory";

private static final String USER = "admin";

private static final String PASS = "secure_password";

public static Connection getConnection() throws SQLException {

Properties props = new Properties();

props.setProperty("user", USER);

props.setProperty("password", PASS);

props.setProperty("ssl", "true");

// In modern Java, explicitly loading the driver class is often unnecessary

// due to SPI (Service Provider Interface), but it remains a classic pattern.

return DriverManager.getConnection(DB_URL, props);

}

public static void testConnection() {

// Using try-with-resources to ensure the connection closes automatically

// This is critical for Java Memory Management and preventing leaks.

try (Connection conn = getConnection()) {

if (conn != null) {

System.out.println("Connected to the database!");

System.out.println("Driver: " + conn.getMetaData().getDriverName());

}

} catch (SQLException e) {

System.err.println("Connection Failed: " + e.getMessage());

}

}

}In the code above, we utilize the try-with-resources statement, introduced in Java 7. This is a vital Java Best Practice. It ensures that the Connection object, which implements AutoCloseable, is closed regardless of whether the operations succeed or throw an exception. Failing to close connections is a primary cause of application failure in Java Cloud deployments like AWS Java or Azure Java environments.

Section 2: Implementation Details and Data Manipulation

Secure Data Access with PreparedStatement

One of the most significant risks in Java Web Development is SQL Injection. Using the basic Statement interface to concatenate strings into SQL queries opens the door for attackers to manipulate your database. The solution is the PreparedStatement.

PreparedStatement pre-compiles the SQL statement, separating the query structure from the data. This not only enhances Java Security but often improves performance because the database can cache the execution plan. Whether you are doing Android Development or building a Java REST API, PreparedStatement is non-negotiable.

Reading and Mapping Data

When retrieving data, JDBC returns a ResultSet. Mapping this result set to Java objects (POJOs) is where things can get verbose. While libraries like JPA (Java Persistence API) automate this, doing it manually gives you granular control, which is sometimes necessary for complex reporting or high-performance scenarios where the overhead of an ORM is undesirable.

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.SQLException;

import java.util.ArrayList;

import java.util.List;

public class ProductRepository {

private static final String SELECT_BY_CATEGORY =

"SELECT id, name, price, description FROM products WHERE category = ?";

public List findByCategory(String category) {

List products = new ArrayList<>();

try (Connection conn = ConnectionManager.getConnection();

PreparedStatement pstmt = conn.prepareStatement(SELECT_BY_CATEGORY)) {

// Binding parameters protects against SQL Injection

pstmt.setString(1, category);

try (ResultSet rs = pstmt.executeQuery()) {

while (rs.next()) {

Product product = new Product();

product.setId(rs.getLong("id"));

product.setName(rs.getString("name"));

product.setPrice(rs.getBigDecimal("price"));

// Handling potential NULL values or CLOBs

product.setDescription(rs.getString("description"));

products.add(product);

}

}

} catch (SQLException e) {

// In a real Java Spring app, you would translate this to a custom DataAccessException

throw new RuntimeException("Error fetching products", e);

}

return products;

}

// Simple POJO inner class for demonstration

public static class Product {

private Long id;

private String name;

private java.math.BigDecimal price;

private String description;

// Getters and Setters omitted for brevity

public void setId(Long id) { this.id = id; }

public void setName(String name) { this.name = name; }

public void setPrice(java.math.BigDecimal price) { this.price = price; }

public void setDescription(String description) { this.description = description; }

}

} This pattern is the bedrock of data access. Even when using Hibernate, the framework is generating similar code under the hood. Understanding how ResultSet cursors work helps in JVM Tuning and memory management, especially when processing large datasets.

Section 3: Advanced Techniques for High Concurrency

Connection Pooling with HikariCP

Creating a new database connection is an expensive operation involving network handshakes and authentication. In a high-concurrency environment, such as a Java Spring Boot application serving thousands of requests, opening a new connection for every request will kill performance. This is where Connection Pooling comes in.

A connection pool maintains a cache of open connections that can be reused. HikariCP is currently the industry standard for high-performance JDBC connection pooling and is the default in Spring Boot 2.0+. It is lightweight and extremely fast.

Properly configuring the pool is essential for Java Scalability. You must balance the pool size against the database’s capability to handle concurrent connections.

import com.zaxxer.hikari.HikariConfig;

import com.zaxxer.hikari.HikariDataSource;

import java.sql.Connection;

import java.sql.SQLException;

import javax.sql.DataSource;

public class DataSourceConfig {

private static HikariDataSource dataSource;

static {

HikariConfig config = new HikariConfig();

config.setJdbcUrl("jdbc:postgresql://localhost:5432/inventory");

config.setUsername("admin");

config.setPassword("secure_password");

// Performance Tuning Settings

config.setMaximumPoolSize(20); // Scale based on thread count and DB capacity

config.setMinimumIdle(5);

config.setIdleTimeout(30000);

config.setConnectionTimeout(20000);

// Caching PreparedStatements can significantly boost performance

config.addDataSourceProperty("cachePrepStmts", "true");

config.addDataSourceProperty("prepStmtCacheSize", "250");

config.addDataSourceProperty("prepStmtCacheSqlLimit", "2048");

dataSource = new HikariDataSource(config);

}

public static Connection getConnection() throws SQLException {

return dataSource.getConnection();

}

public static DataSource getDataSource() {

return dataSource;

}

}Batch Processing

When inserting or updating large volumes of data, executing one query at a time is inefficient due to network latency (the “round-trip” time). JDBC Batch processing allows you to group multiple updates into a single network packet, drastically improving Java Performance.

import java.sql.Connection;

import java.sql.PreparedStatement;

import java.sql.SQLException;

import java.util.List;

public class BatchService {

public void bulkInsertProducts(List products) {

String sql = "INSERT INTO products (name, price, description) VALUES (?, ?, ?)";

try (Connection conn = DataSourceConfig.getConnection();

PreparedStatement pstmt = conn.prepareStatement(sql)) {

// Disable auto-commit to manage the transaction manually

conn.setAutoCommit(false);

int count = 0;

for (ProductRepository.Product p : products) {

pstmt.setString(1, p.getName());

pstmt.setBigDecimal(2, p.getPrice());

pstmt.setString(3, p.getDescription());

pstmt.addBatch();

// Execute in chunks to avoid memory issues

if (++count % 100 == 0) {

pstmt.executeBatch();

}

}

// Execute remaining

pstmt.executeBatch();

// Commit the transaction

conn.commit();

} catch (SQLException e) {

e.printStackTrace();

// In a real app, you would handle rollback here

}

}

} Handling Large Objects (BLOBs and CLOBs)

Modern applications often need to store large data, such as images, PDFs, or massive JSON documents. JDBC handles these via BLOB (Binary Large Object) and CLOB (Character Large Object). When dealing with these, it is crucial to use streams to avoid loading the entire object into the Java Heap, which would trigger excessive Garbage Collection.

Implementing streaming for these fields ensures that your application remains responsive and doesn’t crash with OutOfMemoryError, a key consideration in Docker Java and Kubernetes Java deployments where memory limits are strict.

Section 4: Best Practices and Optimization

Transaction Management

Transactions ensure data integrity. In JDBC, connections are in auto-commit mode by default. For complex business logic involving multiple updates, you must disable auto-commit. This adheres to the ACID properties (Atomicity, Consistency, Isolation, Durability).

While Spring Framework manages this via the @Transactional annotation, understanding the underlying conn.commit() and conn.rollback() is vital for debugging Java Concurrency issues like deadlocks or dirty reads.

Handling Database Dialects

One of the challenges in Java Database development is the slight variation in SQL syntax between vendors (PostgreSQL, MySQL, Oracle, SQL Server). ORMs like Hibernate solve this using “Dialects.” A Dialect informs the ORM how to generate SQL for a specific database version.

However, when using raw JDBC, you are responsible for the SQL syntax. If you are building a product that supports multiple databases, you must abstract your SQL generation logic. Sometimes, standard drivers have limitations with specific data types or newer database features. In such cases, developers might need to extend drivers or implement custom wrappers to handle specific behaviors, ensuring that high-level abstractions like Hibernate can function correctly on top of the JDBC layer.

Monitoring and Observability

In a Java DevOps culture, observability is key. JDBC drivers often provide hooks for logging and monitoring. Tools like P6Spy can wrap your JDBC driver to log the exact SQL being executed and the time it takes. This is invaluable for identifying slow queries and optimizing Java Architecture.

Conclusion

JDBC remains the unsung hero of the Java Ecosystem. While high-level frameworks like Spring Data and Hibernate provide excellent productivity boosts, they are abstractions that can sometimes leak or perform poorly if the underlying mechanics are misunderstood. By mastering JDBC—understanding connection lifecycles, pooling with tools like HikariCP, securing queries with PreparedStatements, and managing transactions manually—you empower yourself to build robust, high-performance Java Applications.

As the landscape evolves with Java 21 and the introduction of virtual threads (Project Loom), JDBC drivers are adapting to become more blocking-friendly or evolving into asynchronous counterparts like R2DBC (Reactive Relational Database Connectivity). However, for the vast majority of enterprise Java Development, blocking JDBC with a well-tuned connection pool remains the standard for reliability and speed.

Whether you are debugging a legacy monolith or building the next generation of Java Microservices, the ability to drop down to the JDBC layer is a superpower that distinguishes a good developer from a great one.